Hand Tracking And Recognition with OpenCV

Computer vision is in many ways the ultimate sensor, with countless potential applications in robotics. Two classmates (Vegar Østhus and Martin Stokkeland) and I did a computer vision project at UCSB and wrote a program that recognizes and tracks finger movements.

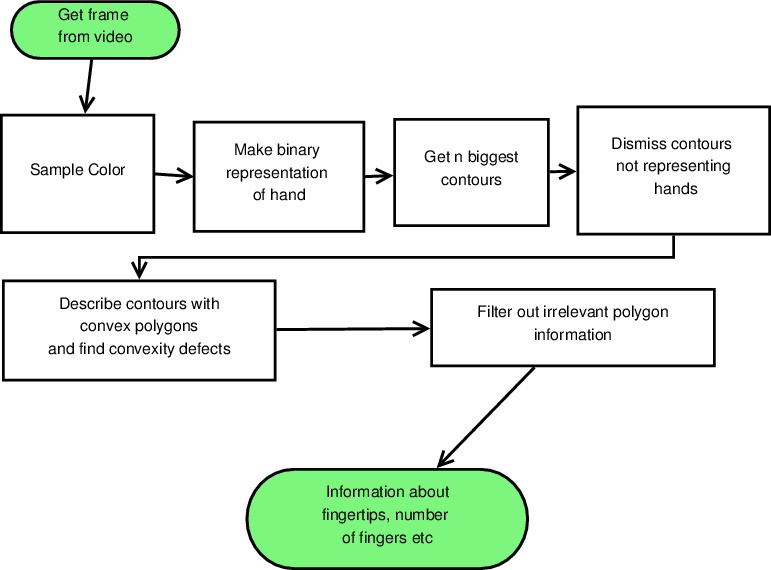

Below is a flowchart of the program:

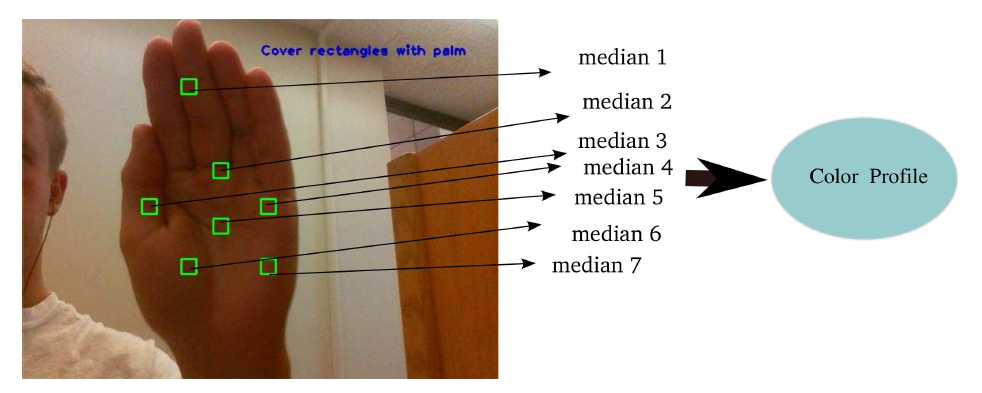

The hand tracking is based on color recognition. The program is therefore initialized by sampling color from the hand:

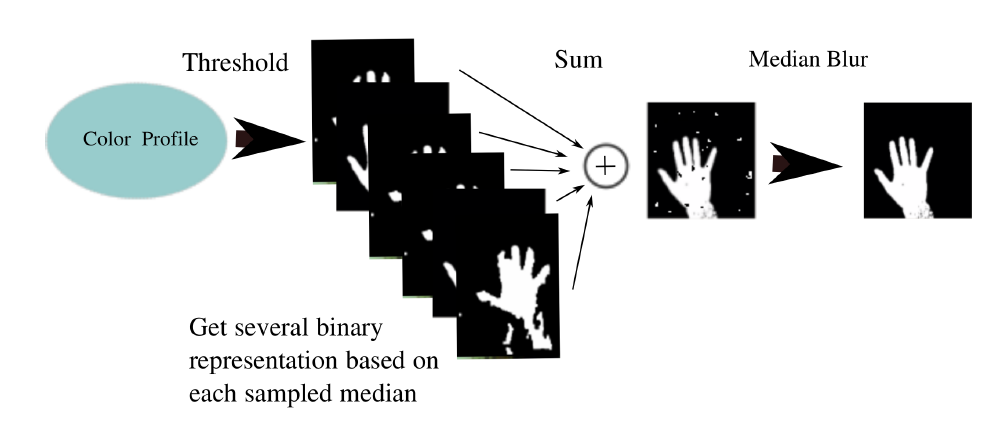

The hand is then extracted from the background by thresholding the image against the sampled color profile. Each color in the profile produces its own binary image, and these are all summed together. A median filter (a nonlinear filter) is then applied to get a smooth, noise-free binary representation of the hand.
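To make the thresholding and filtering step more concrete, here is a minimal sketch in plain Python. It is not the project's code: the function names, the `tolerance` parameter and the 3x3 window size are assumptions made for illustration, and the per-color masks are combined with a logical OR, which gives the same result as summing binary images and clipping at 1. In a real program you would more likely reach for OpenCV's `inRange` and `medianBlur`.

```python
from statistics import median


def threshold_by_profile(image, profile, tolerance):
    """Return a binary mask that is 1 where a pixel lies within
    `tolerance` (per channel) of any sampled color in `profile`.

    `image` is a list of rows, each row a list of (c1, c2, c3) tuples.
    `tolerance` is a hypothetical parameter, not taken from the project.
    """
    height, width = len(image), len(image[0])
    mask = [[0] * width for _ in range(height)]
    for sample in profile:
        # Each sampled color yields its own binary image; writing 1 into
        # the shared mask combines them (logical OR of all per-color masks).
        for y in range(height):
            for x in range(width):
                pixel = image[y][x]
                if all(abs(p - s) <= tolerance for p, s in zip(pixel, sample)):
                    mask[y][x] = 1
    return mask


def median_filter_3x3(mask):
    """Apply a 3x3 median filter to a binary mask.

    Border pixels are copied unchanged to keep the sketch short.
    """
    height, width = len(mask), len(mask[0])
    out = [row[:] for row in mask]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            window = [mask[y + dy][x + dx]
                      for dy in (-1, 0, 1) for dx in (-1, 0, 1)]
            # With nine 0/1 values the median is the majority value.
            out[y][x] = int(median(window))
    return out
```

On a binary image the median acts as a majority vote over the neighbourhood, so isolated noise pixels disappear and small holes inside the hand get filled.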

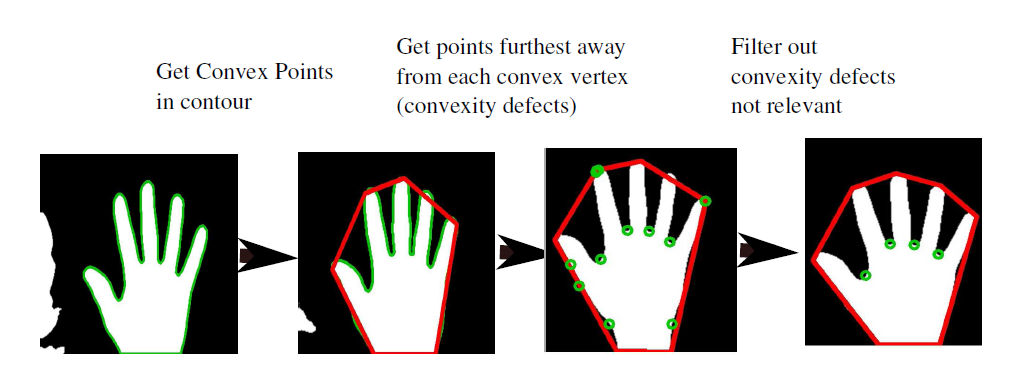

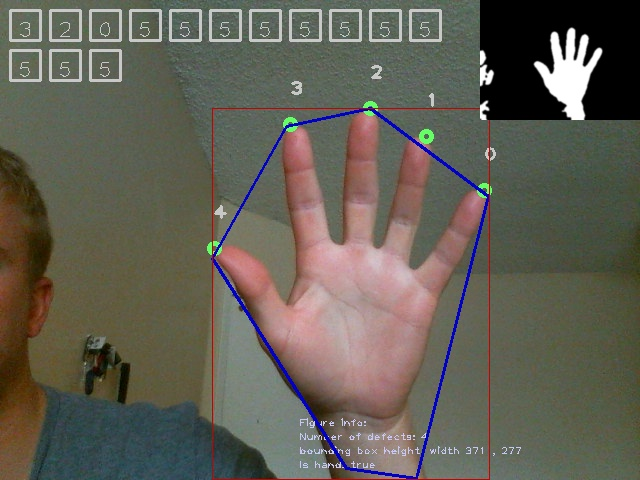

Once the binary representation has been generated, the hand is processed in the following way:

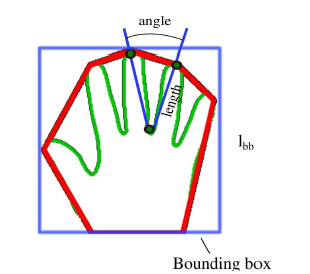

Whether a convexity defect is dismissed depends on the angle between the lines going from the defect to the neighbouring convex polygon vertices.
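The angle described above can be computed from three points: the defect point and the two neighbouring hull vertices. The sketch below shows one way to do it in plain Python. The function names and the 90 degree cutoff in `MAX_ANGLE_DEG` are assumptions for illustration only; they are not the threshold used in the project.

```python
import math

# Hypothetical cutoff, chosen only for this example.
MAX_ANGLE_DEG = 90.0


def defect_angle(start, end, far):
    """Angle in degrees at the defect point `far`, between the lines
    going to the neighbouring hull vertices `start` and `end`.

    Points are (x, y) tuples.
    """
    ax, ay = start[0] - far[0], start[1] - far[1]
    bx, by = end[0] - far[0], end[1] - far[1]
    norm = math.hypot(ax, ay) * math.hypot(bx, by)
    if norm == 0:
        # Degenerate defect (a vertex coincides with the defect point):
        # report a flat angle so that it gets dismissed.
        return 180.0
    cos_theta = (ax * bx + ay * by) / norm
    # Clamp against rounding error before calling acos.
    cos_theta = max(-1.0, min(1.0, cos_theta))
    return math.degrees(math.acos(cos_theta))


def keep_defect(start, end, far, max_angle_deg=MAX_ANGLE_DEG):
    """Return True if the defect is narrow enough to be kept."""
    return defect_angle(start, end, far) <= max_angle_deg
```

The idea is that the gap between two extended fingers forms a fairly sharp angle, while shallow dents along the palm or wrist form wide ones, so a cutoff on the angle separates the two cases.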

The defect is dismissed if:

The analysis produces data that can be put to further use in gesture recognition:

- Fingertip positions

- Number of fingers

- Number of hands

- Area of hands

The source code can be found here
